Connect-IB adapter cards provide the highest performing and most scalable interconnect solution for server and storage systems. High-Performance Computing, Web 2.0, Cloud, Big Data, Financial Services, Virtualised Data Centres and Storage applications will achieve significant performance improvements resulting in reduced completion time and lower cost per operation.

Details

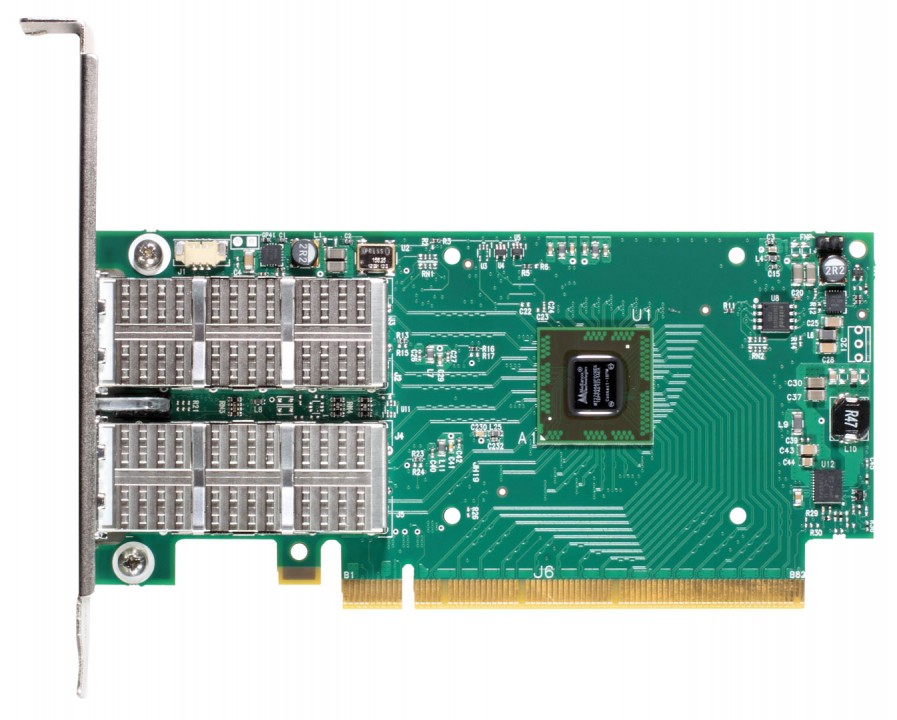

Connect-IB Single/Dual-Port InfiniBand Host Channel Adapter Cards

Connect-IB delivers leading performance with maximum bandwidth, low latency, and computing efficiency for performance-driven server and storage applications. Maximum bandwidth is delivered across PCI Express 3.0 x16 and two ports of FDR InfiniBand, supplying more than 100Gb/s of throughput together with consistent low latency across all CPU cores. Connect-IB also enables PCI Express 2.0 x16 systems to take full advantage of FDR, delivering at least twice the bandwidth of existing PCIe 2.0 solutions.
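The bandwidth figures above can be sanity-checked with a little arithmetic. The sketch below is a rough illustration, not vendor data: it assumes standard 4x FDR ports (14.0625 Gb/s per lane, 64b/66b encoding) and nominal PCI Express line rates (5 GT/s with 8b/10b encoding for Gen 2, 8 GT/s with 128b/130b for Gen 3), and it ignores packet and protocol overhead. The function and constant names are made up for this example.

```python
# Nominal link-rate arithmetic; packet/protocol overhead is ignored.
FDR_LANE_GBPS = 14.0625      # FDR signalling rate per lane (Gb/s)
FDR_LANES = 4                # assumption: standard 4x port width
FDR_ENCODING = 64 / 66       # 64b/66b line encoding

# PCIe generation -> (transfer rate per lane in GT/s, encoding efficiency)
PCIE_GENERATIONS = {
    2: (5.0, 8 / 10),        # Gen 2: 8b/10b encoding
    3: (8.0, 128 / 130),     # Gen 3: 128b/130b encoding
}


def fdr_port_gbps() -> float:
    """Data rate of one 4x FDR port after line encoding, in Gb/s."""
    return FDR_LANE_GBPS * FDR_LANES * FDR_ENCODING


def pcie_gbps(gen: int, lanes: int) -> float:
    """Data rate of a PCIe link, per direction, after line encoding, in Gb/s."""
    rate, encoding = PCIE_GENERATIONS[gen]
    return rate * lanes * encoding


if __name__ == "__main__":
    print(f"One FDR port:  {fdr_port_gbps():.2f} Gb/s")
    print(f"Two FDR ports: {2 * fdr_port_gbps():.2f} Gb/s")
    print(f"PCIe 3.0 x16:  {pcie_gbps(3, 16):.2f} Gb/s")
    print(f"PCIe 2.0 x16:  {pcie_gbps(2, 16):.2f} Gb/s")
    print(f"PCIe 2.0 x8:   {pcie_gbps(2, 8):.2f} Gb/s")
```

Under these assumptions, two FDR ports carry roughly 109 Gb/s, which fits within the roughly 126 Gb/s of a PCIe 3.0 x16 slot. A PCIe 2.0 x16 slot offers about 64 Gb/s, twice the 32 Gb/s of a PCIe 2.0 x8 slot; reading the "twice the bandwidth" claim as a comparison with x8 adapters is an assumption, since the text does not say which existing solutions it means.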

Connect-IB offloads protocol processing and data movement from the CPU to the interconnect, maximising CPU efficiency and accelerating parallel and data-intensive application performance. Connect-IB supports new data operations, including non-continuous memory transfers, which eliminate unnecessary data copy operations and CPU overhead. Additional application acceleration is achieved with a 4x improvement in message rate compared with previous generations of InfiniBand cards.

Benefits

- World-class cluster, network, and storage performance

- Guaranteed bandwidth and low-latency services

- I/O consolidation

- Virtualisation acceleration

- Power efficient

- Scales to tens-of-thousands of nodes

Key Features

- Greater than 100Gb/s over InfiniBand

- Greater than 130M messages/sec

- 1us MPI ping latency

- PCI Express 3.0 x16

- CPU offload of transport operations

- Application offload

- GPU communication acceleration

- End-to-end internal data protection

- End-to-end QoS and congestion control

- Hardware-based I/O virtualisation

- RoHS-R6

| Manufacturer | Mellanox |
|---|---|
| Part No. | MCB194A-FCAT |
| End of Life? | No |
| Advanced Network Features | |
| Bandwidth | Greater than 100Gb/s over InfiniBand |
| Channels | 16 million I/O channels |
| Host OS Support | Novell SLES, RHEL, OFED, other Linux distributions |
| I/O Virtualisation | |
| PCI Slot(s) | Gen 3.0 or 2.0 |
| Ports | Up to 100Gb/s connectivity per port |
| Port-Port Latency | 1us MPI ping latency |