Details

AI Infrastructure

A new core building block for AI infrastructure, the IPU-M2000 is powered by four Colossus Mk2 GC200 IPUs, Graphcore’s second-generation 7nm IPU. It packs 1 PetaFLOP of AI compute, up to 450GB of Exchange Memory and 2.8Tbps of IPU-Fabric bandwidth for ultra-low-latency communication into a slim 1U blade built to handle the most demanding machine intelligence workloads.

The IPU-M2000 has a flexible, modular design, so you can start with one and scale to thousands. It works as a standalone system, eight can be stacked together, and racks of 16 tightly interconnected IPU-M2000s in IPU-POD64 systems can grow to supercomputing scale, thanks to the 2.8Tbps high-bandwidth, near-zero-latency IPU-Fabric™ interconnect architecture built into the box.

Designed from the ground up for high-performance training and inference workloads, the IPU-M2000 unifies your AI infrastructure for maximum datacentre utilization. Start with development and experimentation, then ramp up to full-scale production. Available to pre-order today.

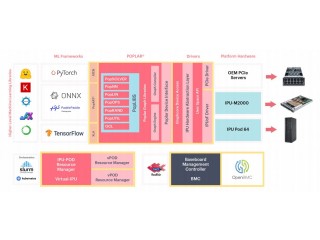

Poplar SDK Software

With Poplar, managing IPUs at scale is as simple as programming a single device, allowing you to focus on the data and the results.

Our state-of-the-art compiler simplifies IPU programming by handling all the scheduling and work partitioning of large models, including memory control, while the Graph Engine builds the runtime that executes your workload efficiently across as many IPUs, IPU-Machines or IPU-PODs as you have available.
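
Poplar's compiler performs this partitioning automatically, and its actual algorithm is not described here. Purely as a toy illustration of what "work partitioning of large models" means, the sketch below assumes each layer's memory footprint is known up front and greedily places consecutive layers on successive IPUs (a pipeline-style split) until an IPU's memory budget is full. `partition_layers` is a hypothetical helper, not part of the Poplar SDK, and the 900 MB per-IPU budget in the usage example is an assumed, illustrative figure.

```python
from typing import List


def partition_layers(layer_mem_mb: List[float], num_ipus: int,
                     ipu_mem_mb: float) -> List[List[int]]:
    """Hypothetical toy partitioner: assign consecutive layers to IPUs so
    that no IPU's total layer memory exceeds ipu_mem_mb.

    Returns one list of layer indices per IPU (pipeline stage).
    Raises ValueError if a layer is too big or the model needs too many IPUs.
    """
    stages: List[List[int]] = [[]]
    used = 0.0
    for idx, mem in enumerate(layer_mem_mb):
        if mem > ipu_mem_mb:
            raise ValueError(f"layer {idx} needs {mem} MB, more than one IPU holds")
        # Start a new stage (next IPU) when this layer would overflow the current one.
        if used + mem > ipu_mem_mb:
            stages.append([])
            used = 0.0
        stages[-1].append(idx)
        used += mem
    if len(stages) > num_ipus:
        raise ValueError(f"model needs {len(stages)} IPUs, only {num_ipus} available")
    return stages


# Five layers on a single IPU-M2000 (4 IPUs), assuming a 900 MB budget per IPU.
print(partition_layers([300, 400, 250, 500, 100], num_ipus=4, ipu_mem_mb=900))
```

Here layers 0 and 1 share the first IPU and layers 2 to 4 share the second. A real compiler also weighs compute balance, inter-IPU communication and activation memory, which this greedy sketch ignores.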

As well as running big models across large IPU configurations, Graphcore’s Virtual IPU software lets you share your AI compute dynamically. You can have tens, hundreds, even thousands of IPUs working together on model training while allocating your remaining IPU-M2000 machines to inference and production deployment.
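
To make the sharing idea concrete, here is a minimal bookkeeping sketch, assuming a pool of IPU-M2000 machines is split into one training partition plus single-machine inference partitions. The `Partition` class, `split_pool` function and the one-machine-per-inference-job policy are all hypothetical; this is not the Virtual IPU software's interface. The only figure taken from the product description is four IPUs per IPU-M2000.

```python
from dataclasses import dataclass
from typing import List

IPUS_PER_M2000 = 4  # from the spec: 4 x Colossus Mk2 GC200 per IPU-M2000


@dataclass
class Partition:
    """Hypothetical partition record: a name and a whole number of machines."""
    name: str
    machines: int

    @property
    def ipus(self) -> int:
        return self.machines * IPUS_PER_M2000


def split_pool(total_machines: int, training_machines: int) -> List[Partition]:
    """Reserve training_machines for one training partition and give each
    remaining machine its own inference partition (illustrative policy)."""
    if not 0 <= training_machines <= total_machines:
        raise ValueError("training_machines must be between 0 and total_machines")
    parts: List[Partition] = []
    if training_machines:
        parts.append(Partition("training", training_machines))
    for i in range(total_machines - training_machines):
        parts.append(Partition(f"inference-{i}", 1))
    return parts


# One IPU-POD64 (16 machines): 12 machines for training, 4 left for inference.
for p in split_pool(16, 12):
    print(p.name, p.machines, "machine(s)", p.ipus, "IPUs")
```

With 16 machines and 12 reserved for training, the training partition gets 48 IPUs and four inference partitions get 4 IPUs each, accounting for all 64 IPUs.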

Scalable

Connect a single system directly to an existing CPU server, add up to eight connected IPU-M2000s, or grow to supercomputing scale with racks of 16 tightly interconnected IPU-M2000s in IPU-POD64 systems, thanks to the high-bandwidth, near-zero-latency IPU-Fabric™ interconnect architecture built into the box.
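
The scaling options above reduce to simple arithmetic on the quoted per-machine figures (4 IPUs and 1 PetaFLOP of peak AI compute per IPU-M2000). The sketch below tabulates the three configurations, assuming peak compute adds up linearly across machines; sustained throughput on a real workload will be lower and depends on the model and on communication over IPU-Fabric. `config_summary` is an illustrative helper, not a Graphcore tool.

```python
# Figures taken from the product description; everything else is illustrative.
IPUS_PER_MACHINE = 4        # 4 x Colossus Mk2 GC200 per IPU-M2000
PFLOPS_PER_MACHINE = 1.0    # quoted peak AI compute per IPU-M2000


def config_summary(machines: int) -> dict:
    """Return IPU count and quoted peak compute for `machines` IPU-M2000s,
    assuming peak compute scales linearly with machine count."""
    return {
        "machines": machines,
        "ipus": machines * IPUS_PER_MACHINE,
        "peak_pflops": machines * PFLOPS_PER_MACHINE,
    }


for label, n in [("standalone", 1), ("stacked", 8), ("IPU-POD64", 16)]:
    s = config_summary(n)
    print(f"{label}: {s['machines']} machine(s), {s['ipus']} IPUs, "
          f"{s['peak_pflops']:.0f} PFLOPS peak")
```

This prints 4, 32 and 64 IPUs for the three configurations; the 64-IPU total for 16 machines is where the IPU-POD64 name comes from.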

| Part No. | IPU-M2000 |
|---|---|
| Manufacturer | Graphcore |
| End of Life? | No |
| Rack Units | 1 |
| CPU Socket(s) | 1 |
| Compatible CPU(s) | Quad-core Arm Cortex-A SoC; ultra-low-latency IPU-Fabric interconnect |
| Max # Core(s) | 4 |
| Memory Slot(s) | 2 x DDR4 DIMM DRAM |
| Memory Capacity | Up to 450GB Exchange Memory |
| PCI Slot(s) | 4 x Colossus Mk2 GC200 IPU |
| LAN Socket(s) | RoCEv2/SmartNIC connector |
| Graphics Cards | None (AI compute is provided by the 4 x Colossus Mk2 GC200 IPUs on board) |