Intelligent RDMA-enabled network adapter card with advanced application offload capabilities for High-Performance Computing, Web 2.0, Cloud and Storage platforms.

Details

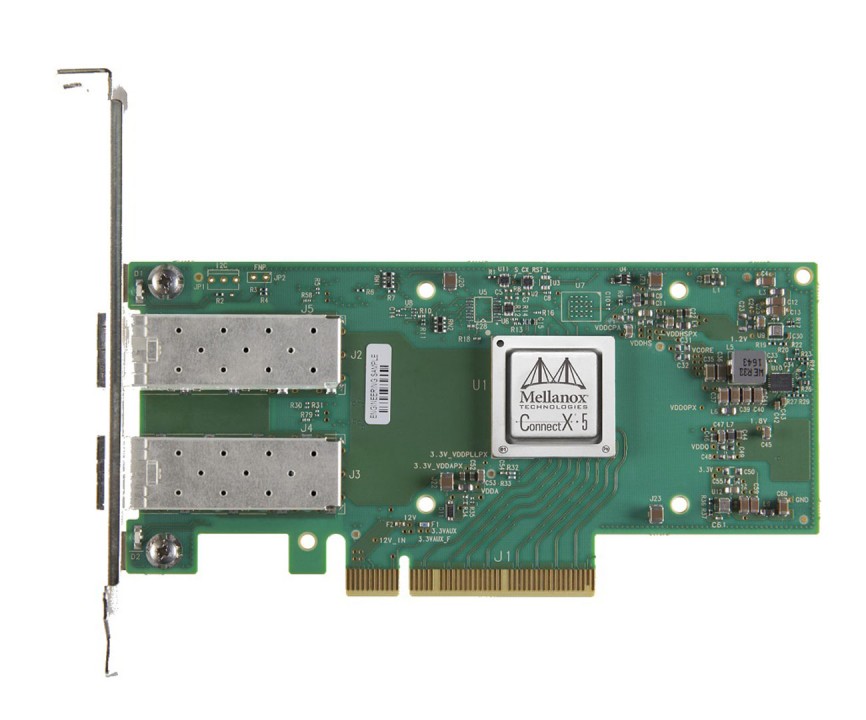

ConnectX-5 Single/Dual-Port Adapter supporting 100Gb/s with VPI

Intelligent ConnectX-5 adapter cards belong to the Mellanox Smart Interconnect suite and support Co-Design and In-Network Compute, providing acceleration engines that maximise performance for High-Performance Computing, Web 2.0, Cloud, Data Analytics and Storage platforms.

ConnectX-5 with Virtual Protocol Interconnect (VPI) supports two ports of 100Gb/s InfiniBand and Ethernet connectivity, sub-600 nanosecond latency and a very high message rate (up to 200 million messages per second), plus embedded PCIe switch and NVMe over Fabric offloads, providing the highest performance and most flexible solution for the most demanding applications and markets.

ConnectX-5 enables higher HPC performance with new Message Passing Interface (MPI) offloads, such as MPI Tag Matching and MPI AlltoAll operations, advanced dynamic routing, and new capabilities to perform various data algorithms.

Moreover, ConnectX-5 Accelerated Switching and Packet Processing (ASAP2) technology enhances the offloading of virtual switches such as Open vSwitch (OVS), which results in significantly higher data transfer performance without overloading the CPU. Together with native RDMA and RoCE support, ConnectX-5 dramatically improves Cloud and NFV platform efficiency.

Mellanox offers an alternative ConnectX-5 Socket Direct card that enables a 100Gb/s transmission rate on servers without x16 PCIe slots as well. The adapter's 16-lane PCIe bus is split into two 8-lane buses, with one bus accessible through a PCIe x8 edge connector and the other bus through an x8 parallel connector to an Auxiliary PCIe Connection Card. The two cards are connected using a dedicated harness. Moreover, the card improves performance by giving each CPU in a dual-socket server direct access to the network through its own dedicated PCIe x8 interface.
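As a rough check on why a single x8 slot cannot carry a 100Gb/s port on its own, the sketch below computes approximate per-direction PCIe link bandwidth from the per-lane signalling rate and line encoding. It assumes a PCIe Gen 3.0 host (8 GT/s per lane, 128b/130b encoding) and ignores packet and protocol overhead, so real throughput is lower; the function names are illustrative only.

```python
# Per-lane signalling rates in GT/s for the PCIe generations listed in the spec table.
GT_PER_LANE = {"1.1": 2.5, "2.0": 5.0, "3.0": 8.0, "4.0": 16.0}


def encoding_efficiency(gen: str) -> float:
    """Fraction of raw bits that carry data: 8b/10b for Gen 1.1/2.0, 128b/130b for Gen 3.0/4.0."""
    return 8 / 10 if gen in ("1.1", "2.0") else 128 / 130


def link_bandwidth_gbps(gen: str, lanes: int) -> float:
    """Approximate usable bandwidth per direction in Gb/s, ignoring TLP/DLLP protocol overhead."""
    return GT_PER_LANE[gen] * lanes * encoding_efficiency(gen)


if __name__ == "__main__":
    for gen, lanes in [("3.0", 8), ("3.0", 16), ("4.0", 8)]:
        bw = link_bandwidth_gbps(gen, lanes)
        verdict = "enough" if bw >= 100 else "not enough"
        print(f"PCIe Gen {gen} x{lanes}: {bw:.1f} Gb/s ({verdict} for one 100Gb/s port)")
```

Under these assumptions a Gen 3.0 x8 link tops out at about 63 Gb/s per direction, while a Gen 3.0 x16 link (or two x8 links combined) reaches about 126 Gb/s.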

Benefits

- Industry-leading throughput, low latency, low CPU utilisation and high message rate

- Innovative rack design for storage and Machine Learning based on Host Chaining technology

- Smart interconnect for x86, Power, ARM, and GPU-based compute and storage platforms

- Advanced storage capabilities including NVMe over Fabric offloads

- Intelligent network adapter supporting flexible pipeline programmability

- Cutting-edge performance in virtualised networks including Network Function Virtualisation (NFV)

- Enabler for efficient service chaining capabilities

Key Features

- EDR 100Gb/s InfiniBand or 100Gb/s Ethernet per port and all lower speeds

- Up to 200M messages/second

- Tag Matching and Rendezvous Offloads

- Adaptive Routing on Reliable Transport

- Burst Buffer Offloads for Background Checkpointing

- NVMe over Fabric (NVMf) Target Offloads

- Back-End Switch Elimination by Host Chaining

- Embedded PCIe Switch

- Enhanced vSwitch/vRouter Offloads

- Flexible Pipeline

- RoCE for Overlay Networks

- PCIe Gen 4 Support

- Erasure Coding Offload

- IBM CAPI v2 support

- T10-DIF Signature Handover

- Mellanox PeerDirect communication acceleration

- Hardware offloads for NVGRE and VXLAN encapsulated traffic

- End-to-end QoS and congestion control

- Hardware-based I/O virtualisation

| Manufacturer | Mellanox |
|---|---|
| Part No. | MCX555A-ECAT |
| End of Life? | No |
| Advanced Network Features | |
| Bandwidth | Max 100Gb/s |
| Channels | 16 million I/O channels |
| Host OS Support | RHEL, CentOS, Windows, FreeBSD, VMware, OFED, WinOF-2 |
| I/O Virtualisation | |
| Supported Software | OpenMPI, IBM PE, OSU MPI (MVAPICH/2), Intel MPI, Platform MPI, UPC, OpenSHMEM |
| PCIe Slot(s) | Gen 4.0, 3.0, 2.0, 1.1 compatible |
| Ports | Up to 100Gb/s connectivity per port |
| Port-Port Latency | Sub-600ns |
| IEEE Compliance | |
| RoHS | RoHS R6 |