Over the past decade, deep learning has qualitatively changed the way we view machines. From computer vision to medicine, from autonomous driving to astronomy, the ever-expanding applications of deep learning seem like only a stretch on the surface of a universe of new possibilities.

However, due to the black-box nature of deep learning, we naturally raise the question of whether deep learning is safe and robust with these safety-critical and security-critical tasks. In this post, I will talk about what is safety and robustness of deep learning, and how verification methods can help with this problem.

Back in 2013, Szegedy et al.[1] first proposed the concept of adversarial examples: fooling machine learning models into making wrong predictions by adding slight noise to the input that may be imperceptible to humans.

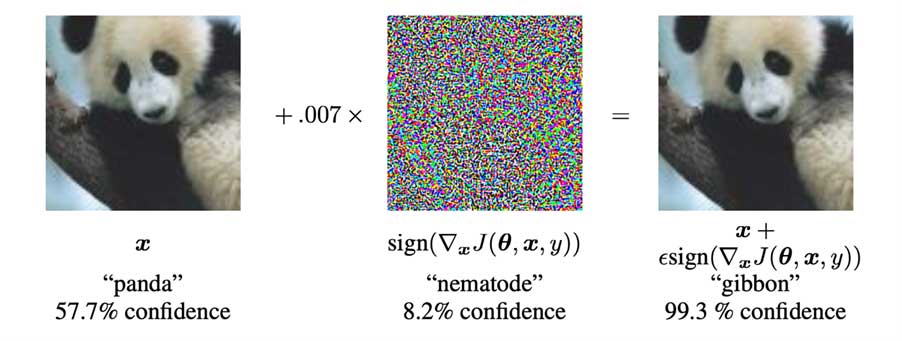

Goodfellow et al.[2] later showed that it is very easy to find such adversarial examples, such as this panda recognized (with high confidence) as a gibbon when some minor noise that’s pretty much invisible to humans is added:

Similar results have been shown on more concerning datasets and applications. Eykholt et al.[3] showed that small stickers taped to a stop sign can fool a vision system to recognize the sign as a “Speed Limit 45” sign, implying that this might lead to wrong and dangerous actions taken by an autonomous car.

These discoveries demonstrated the safety and security problems we currently have with neural networks, and in a nutshell, the robustness problem arises as we use these deep learning models without understanding how they make prediction internally and how they actually learn.

Luckily, motivated by these works, there is a huge line of active research on robustness of neural networks including adversarial attacks, adversarial defences, and robustness verification, which we will go over each in depth below.

Adversarial attacks aim to efficiently generate adversarial examples and adversarial defences work on developing guard against such adversarial examples. Naturally it’s a never-ending arms race between the two research areas, with many interesting methods proposed. For attacks, there are black-box and white-box attacks. In white box attacks the attacker has access to the model’s parameters, whilst in black box attacks, the attacker has no access to these parameters, i.e., it uses a different model or no model at all to generate adversarial images with the hope that these will transfer to the target model.

One successful category of white-box attacks is gradient-based attacks, exploiting the gradient of the model’s parameters. Some famous gradient-based attacks include Fast Gradient Sign Method (FGSM), Projected Gradient Descent Attack (PGD) and C&W (Carlini and Wagner). There methods are some of the most effective adversarial attacks to date.

For defence, there are four main categories: augmenting training data with adversarial examples, detecting adversarial examples/attacks, incorporating randomness in the model and filtering adversarial examples with projections. The first category, also the most common defence, trains the model with adversarial examples obtained from attack methods. Also, a min-max approach which iteratively generates adversarial examples with PGD attack while training the model is proposed to improve the performance and efficiency.

Another interesting category is incorporating randomness. Because adversarial perturbations can be viewed as noise, randomness can be introduced to various parts of the model such as the input, the hidden layers or straight on the parameters of the model to improve robustness.

Last but not least, there are a family of different verification methods for adversarial examples, meaning to verify that small perturbations to inputs should not result in changes to the output of the neural network. There are two main categories of verification methods: constraint-based and abstraction-based. Constraint-based verification takes a neural network and encode the verification problem as a set of constraints that can be solved by a Satisfiability Modulo Theories (SMT) solver or a Mixed Integer Linear Program (MILP) solver.

The benefit is that the results are exact, but the methods suffer from exponential growth in computation time with respect to the size of the neural network, limiting their scalability. Abstraction-based verification on the other hand is aimed for scalability; these methods employ abstract domains (intervals) to evaluate the neural network on sets of inputs, relaxing the verification problem to gain speed.

We have introduced the problem of robustness of neural networks, introduced different lines of works on robustness, and hopefully by the end you have gained a comprehensive overview of robustness. Business Systems International (BSI) is the largest Nvidia GPU supplier in Europe and we provide custom solutions of complete AI Machine Learning environments that enable the training of complex machine learning models as well as robustness analysis on these models.

To effectively run AI systems, you need the highest performing hardware. BSI offers various types of AI optimised servers running on GPUs, which can all be configured to your exact specifications. You can find out more information on these solutions here or by getting in touch on +44 207 352 7007.

This article was provided by our AI researcher Bill Shao.

To learn more...

BSI is a Dell Technologies Titanium Partner. Our Dell Technologies AI solutions can be viewed here and our AI inception programme here. Get in touch to discover how we could optimise your business with AI.

Article references:

[1] C. Szegedy et al., “Intriguing properties of neural networks,” 2014.

[2] I. J. Goodfellow, J. Shlens, and C. Szegedy, “Explaining and harnessing adversarial examples,” 2015.

[3] K. Eykholt et al., “Robust Physical-World Attacks on Deep Learning Models,” Jul. 2017, doi: 10.48550/arxiv.1707.08945.