Details

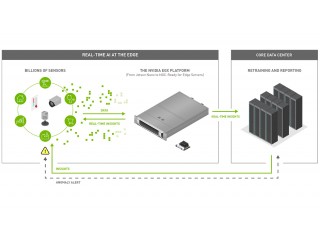

Enterprises are now operating at the edge. On factory floors. In stores. On city streets. In urgent care facilities. At the edge, data flows from billions of IoT sensors to be processed by edge servers, driving real-time decisions where they’re needed.

All of this is possible—smart retail, healthcare, manufacturing, transportation, and cities—with the NVIDIA EGX A100 platform, which brings the power of accelerated AI to the edge.

Runs AI Containers

The NGC registry features an extensive range of GPU-accelerated software for EGX, including Helm charts for deployment on Kubernetes.

NGC containers cover the top AI and data science software, tuned, tested, and optimized by NVIDIA.

The registry also gives users access to third-party domain-specific, pre-trained models and Kubernetes-ready Helm charts that make it easy to deploy powerful software or build customized solutions.

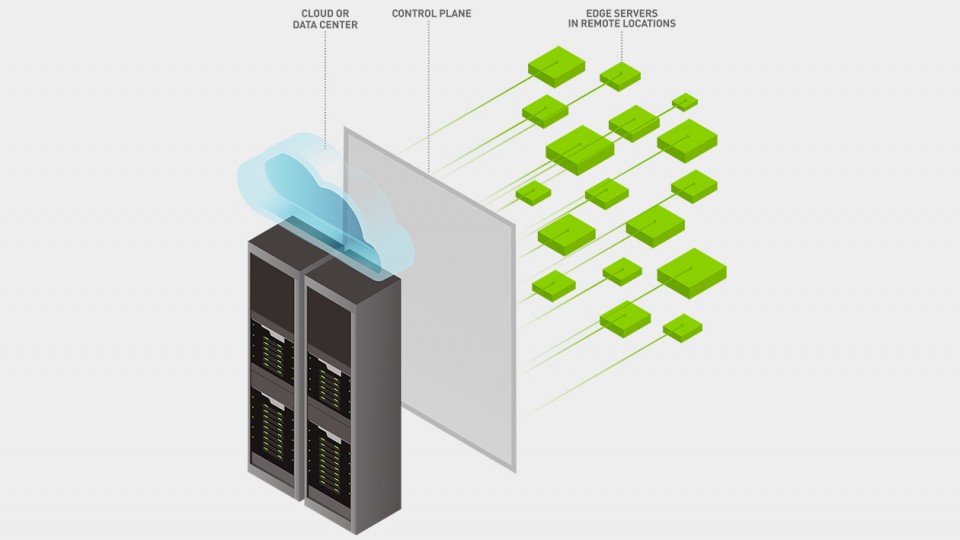

Cloud Native, Edge-First

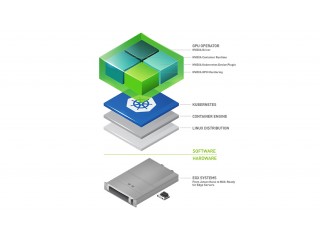

NVIDIA EGX is a cloud native, edge-first, and scalable software stack that enables IT to quickly and easily provision GPU servers.

A primary component of EGX A100 is the NVIDIA GPU Operator, which standardizes and automates the deployment of all the necessary components for provisioning GPU-enabled Kubernetes clusters.
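As a rough sketch of what that automated deployment can look like, the Python snippet below assembles the Helm commands commonly used to install the GPU Operator. The repository URL, the chart name `nvidia/gpu-operator`, and the namespace are assumptions that this page does not state, so check them against current NVIDIA documentation before use. The snippet only builds and prints the commands; it does not run them.

```python
import shlex

# Assumed values (not given in this page): NGC Helm repository and chart name.
NGC_HELM_REPO = "https://helm.ngc.nvidia.com/nvidia"
CHART = "nvidia/gpu-operator"


def gpu_operator_commands(namespace="gpu-operator"):
    """Return the Helm commands, as argument lists, for a GPU Operator install."""
    return [
        ["helm", "repo", "add", "nvidia", NGC_HELM_REPO],
        ["helm", "repo", "update"],
        [
            "helm", "install", "--wait", "--generate-name",
            "--namespace", namespace, "--create-namespace",
            CHART,
        ],
    ]


if __name__ == "__main__":
    # Print each command in a shell-safe form for review or copy-paste.
    for cmd in gpu_operator_commands():
        print(shlex.join(cmd))
```

Returning argument lists rather than one shell string keeps the commands safe to pass to `subprocess.run` later without extra quoting.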

EGX-Ready Servers

The EGX hardware portfolio starts with the tiny NVIDIA® Jetson Nano™, which in a few watts can provide one-half trillion operations per second (TOPS) of processing for tasks such as image recognition.

And it scales all the way to a full rack of NVIDIA T4 servers, delivering more than 10,000 TOPS to serve hundreds of users with real-time speech recognition and other complex AI experiences.
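To put the two ends of that range in perspective, here is a small back-of-the-envelope calculation that uses only the figures quoted above: 0.5 TOPS for the Jetson Nano, and 10,000 TOPS taken as a lower bound for a rack of T4 servers.

```python
# Figures quoted in the text above.
JETSON_NANO_TOPS = 0.5   # "one-half trillion operations per second"
T4_RACK_TOPS = 10_000    # "more than 10,000 TOPS", used here as a lower bound


def scale_factor(low_tops, high_tops):
    """Return how many times more throughput the high end offers."""
    return high_tops / low_tops


if __name__ == "__main__":
    factor = scale_factor(JETSON_NANO_TOPS, T4_RACK_TOPS)
    print(f"A T4 rack offers at least {factor:,.0f}x the TOPS of a Jetson Nano")
```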

AI Scalability from Edge to Data Center

The NVIDIA EGX platform includes a scalable range of NGC-Ready for Edge validated servers—from the Jetson Nano handling traffic cameras in a smart city, to a single edge server with NVIDIA GPUs installed in the back room of a retail store, to an entire fleet of servers comprising a micro data center for telecommunications operations.

Whatever the form factor, the NVIDIA EGX stack is compatible across the board, allowing businesses to scale edge operations quickly and efficiently, from cameras and devices to a remote large-scale data center.

Accelerating the IoT Revolution with AI and 5G

5G is ushering in a new era of wireless communications that delivers 10X lower latency and 1000X the bandwidth.

It also introduces the concept of network slicing, which will allow telecommunications companies (telcos) to dynamically offer unique services to customers.

As telcos transition to 5G, they’ll look to operate a range of software-defined applications at the telco edge—from AI and augmented reality to virtual reality and gaming.

With NVIDIA EGX and the new NVIDIA Aerial SDK, GPU-accelerated, software-defined virtual radio access networks (vRANs) are arriving sooner than you think.

IT Simplicity for Edge Deployment

With the NVIDIA EGX Stack, traditional IT issues of installing software on remote systems disappear. Using Helm charts, containers, and continuous integration and continuous delivery (CI/CD), organizations can now deploy updated AI containers effortlessly in minutes.
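A CI/CD job could roll out a new container version with a single Helm command. The sketch below builds such a command in Python. The release name, chart reference, and the `image.tag` value key are hypothetical, since value names depend on the individual chart.

```python
import shlex


def rollout_command(release, chart, image_tag, namespace="default"):
    """Build a `helm upgrade --install` command for a new image tag.

    `image.tag` is an assumed value key; real charts may name it differently.
    """
    return [
        "helm", "upgrade", "--install", release, chart,
        "--namespace", namespace,
        "--set", f"image.tag={image_tag}",
        "--wait",
    ]


if __name__ == "__main__":
    # Hypothetical release, chart, and tag, for illustration only.
    cmd = rollout_command("edge-inference", "example/edge-inference", "1.2.3")
    print(shlex.join(cmd))
```

Using `upgrade --install` makes the step idempotent: the same pipeline stage handles both a first deployment and later updates.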

The Kubernetes-based NVIDIA GPU Operator removes the need for separate operating system (OS) images for GPU and CPU nodes. Instead, it bundles everything needed to support a Tesla GPU (the driver, container runtime, device plug-in, and monitoring) and deploys it with a Helm chart.

Now, a single gold master image covers both CPU and GPU nodes.
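With the device plug-in in place, a workload asks for a GPU through an extended resource rather than relying on a special node image. The sketch below builds a minimal Pod manifest in Python and prints it as JSON, which `kubectl apply -f` accepts. The pod name and container image are hypothetical placeholders; `nvidia.com/gpu` is the resource name advertised by the NVIDIA device plug-in.

```python
import json


def gpu_pod_manifest(name, image, gpus=1):
    """Return a minimal Pod manifest that requests `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resource exposed by the NVIDIA device plug-in.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    # Hypothetical pod name and image, for illustration only.
    manifest = gpu_pod_manifest("gpu-test", "example.com/my-ai-app:latest")
    print(json.dumps(manifest, indent=2))
```

Pods that omit the `nvidia.com/gpu` limit can still be scheduled on CPU-only nodes built from the same image.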

Compatible with Kubernetes Management Platforms

The ability to deploy, scale, and manage a production-grade, enterprise-class Kubernetes cluster is critical. The EGX stack architecture is supported by leading hybrid-cloud platform partners: Canonical, Cisco Container Platform (CCP), Microsoft Azure, Nutanix, Red Hat, and VMware.

Their support for the GPU Operator ensures these clusters are configured for GPU workloads and that they perform in an optimal, consistent fashion.

| Manufacturer | NVIDIA |
|---|---|
| Part No. | NVIDIA-EGX-A100 |
| End of Life? | No |
| Rack Units | 6 |