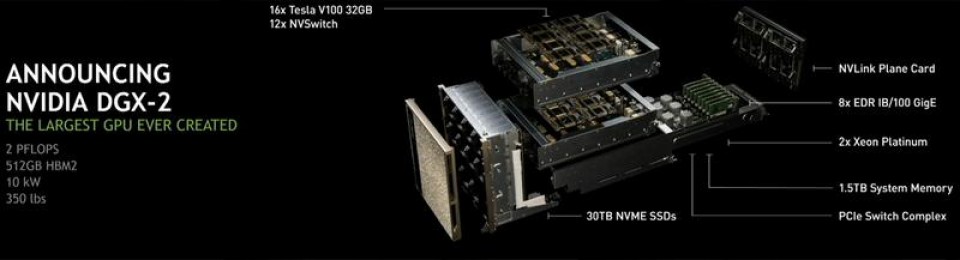

NVIDIA DGX-2 Enterprise AI Research System

The world's most powerful AI system for the most complex AI challenges


The Challenge of Scaling to Meet the Demands of Modern AI and Deep Learning

Deep neural networks are rapidly growing in size and complexity, in response to the most pressing challenges in business and research.

The computational capacity needed to support today’s AI workloads has outpaced traditional data center architectures. Modern techniques that exploit model parallelism are colliding with the limits of inter-GPU bandwidth as developers build ever-larger accelerated computing clusters and push the limits of data center scale. A new approach is needed: one that delivers almost limitless AI computing scale to break through the barriers to faster insights.

Unbeatable compute power for unprecedented training

AI is getting increasingly complex, demanding unprecedented levels of compute power. NVIDIA DGX-2 packs 16 of the world’s most powerful GPUs to accelerate new AI model types that were previously untrainable. Its groundbreaking GPU scalability lets you train 4X bigger models on a single node with 10X the performance of an 8-GPU system.
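The page does not break down the 4X figure. One plausible reading, sketched below, compares aggregate GPU memory: the 512 GB listed in the specification table for DGX-2 against an 8-GPU system built from 16 GB GPUs. Both the 16 GB-per-GPU baseline and the idea that the claim rests on memory capacity are assumptions made for illustration.

```python
# Rough check of the "4X bigger models" claim, ASSUMING that the largest model
# a single node can hold is bounded by aggregate GPU memory.
# The 8 x 16 GB baseline is an assumption, not a figure from this page.

def aggregate_gpu_memory_gb(num_gpus: int, gb_per_gpu: int) -> int:
    """Total GPU memory across all GPUs in one node, in GB."""
    return num_gpus * gb_per_gpu

dgx2_gb = aggregate_gpu_memory_gb(16, 32)     # 16 GPUs x 32 GB = 512 GB, as in the spec table
baseline_gb = aggregate_gpu_memory_gb(8, 16)  # assumed 8-GPU system with 16 GB per GPU
capacity_ratio = dgx2_gb / baseline_gb

print(f"DGX-2: {dgx2_gb} GB, 8-GPU baseline: {baseline_gb} GB, ratio: {capacity_ratio:.1f}X")
```

This only bounds how much model state (parameters, activations, optimizer state) fits in GPU memory; it says nothing about the 10X performance figure, which depends on the workload.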

NVIDIA DGX-2 is now available in two models, including the new, enhanced DGX-2H, which is specifically engineered for maximum performance in the most demanding applications. DGX-2H is the compute building block of the DGX-2 POD, the first AI supercomputing infrastructure to achieve TOP500 performance.

NVIDIA NVSwitch—A Revolutionary AI Network Fabric

Leading-edge research demands the freedom to leverage model parallelism and requires never-before-seen levels of inter-GPU bandwidth. NVIDIA has created NVSwitch to address this need.

Like the evolution from dial-up to ultra-high-speed broadband, NVSwitch delivers a networking fabric for the future, today. With DGX-2, model complexity and size are no longer constrained by the limits of traditional architectures. Embrace model-parallel training with the NVIDIA NVSwitch networking fabric, the innovative technology behind the world’s first 2-petaFLOPS GPU accelerator, which delivers 2.4 TB/s of bisection bandwidth, a 24X increase over prior generations. This interconnect “superhighway” opens up new possibilities for model types that can harness distributed training across 16 GPUs at once.
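The 2.4 TB/s bisection figure can be reproduced with simple arithmetic under a few assumptions that this page does not state: each Tesla V100 has six NVLink 2.0 links at 25 GB/s per direction (300 GB/s bidirectional per GPU), and the NVSwitch fabric is non-blocking, so every GPU on one side of a cut through the middle of the system can drive its full NVLink bandwidth to the other side. A minimal sketch:

```python
# Back-of-the-envelope bisection bandwidth for a 16-GPU switched fabric.
# ASSUMED V100 NVLink 2.0 figures (not stated on this page):
LINKS_PER_GPU = 6             # NVLink links per GPU
GB_S_PER_LINK_EACH_WAY = 25   # GB/s per link, per direction

def per_gpu_bandwidth_gb_s() -> int:
    """Bidirectional NVLink bandwidth of one GPU, in GB/s."""
    return LINKS_PER_GPU * GB_S_PER_LINK_EACH_WAY * 2

def bisection_bandwidth_tb_s(num_gpus: int) -> float:
    """Bandwidth across a cut that splits the GPUs into two equal halves, in TB/s.

    ASSUMES a non-blocking fabric: each GPU in one half can use all of its
    links to reach the other half. Traffic is counted in both directions.
    """
    gpus_per_half = num_gpus // 2
    return gpus_per_half * per_gpu_bandwidth_gb_s() / 1000

print(per_gpu_bandwidth_gb_s(), "GB/s per GPU")
print(bisection_bandwidth_tb_s(16), "TB/s bisection")
```

The result depends entirely on the assumed link count and speed; reproducing the 24X comparison would additionally require figures for the prior-generation system, which this page does not give.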

AI Scale on a Whole New Level

Modern enterprises need to rapidly deploy AI power in response to business imperatives and scale out AI without scaling up cost or complexity. We’ve built DGX-2 and powered it with DGX software that enables accelerated deployment and simplified operations at scale.

DGX-2 delivers a ready-to-go solution that offers the fastest path to scaling up AI, along with virtualization support that enables you to build your own private, enterprise-grade AI cloud. Customers can use either the preinstalled Ubuntu Linux host OS, popular among developers, or install Red Hat Enterprise Linux as the host OS if IT teams prefer to integrate DGX-2 with their existing Red Hat data center deployments.

Businesses can now harness unrestricted AI power in a solution that scales effortlessly, with a fraction of the networking infrastructure otherwise needed to bind accelerated computing resources together. With an accelerated deployment model and an architecture purpose-built for ease of scale, your team can spend more time driving insights and less time building infrastructure.

Access to AI Expertise

With DGX-2, you benefit from NVIDIA’s AI expertise, enterprise-grade support, extensive training, and field-proven capabilities that can jump-start your work for faster insights.

The dedicated NVIDIA and BSI team is ready to get you started with prescriptive guidance, design expertise, and access to our fully optimized DGX software stack. You get an IT-proven solution, backed by enterprise-grade support, and a team of experts who can help ensure your mission-critical AI applications stay up and running.

| Attribute | Value |
|---|---|
| Part No. | DGX2-2510A+P2CMI00 |
| Manufacturer | NVIDIA |
| End of Life? | Yes |
| Preceded By | DGX-1 |
| Rack Units | 2 |
| System Weight | 134 lbs |
| System Dimensions | 866 D x 444 W x 131 H (mm) |
| Packing Dimensions | 1,180 D x 730 W x 284 H (mm) |
| Operating Temperature Range | 10–35 °C |
| EAN | TCSDGX2-PB |
| Specification | TCSDGX2-PB |
| NVIDIA CUDA Cores | 81,920 |
| NVIDIA Tensor Cores | 10,240 |
| NVIDIA NVSwitches | 12 |
| Performance | 2 petaFLOPS |
| CPUs | Dual Intel Xeon Platinum 8168, 2.7 GHz, 24 cores |
| No. of GPUs | 16X NVIDIA® Tesla V100 |
| System Memory | 1.5 TB |
| GPU Memory | 512 GB total |
| Storage Capacity | OS: 2X 960 GB NVMe SSDs; internal storage: 30 TB (8X 3.84 TB) NVMe SSDs |
| Supported OS | Ubuntu Linux Host OS (Red Hat Enterprise Linux Host OS can be installed instead) |
| Ports | Dual 10 GbE, 4 IB EDR |
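Several GPU-related rows in the table are per-GPU figures multiplied by 16. The sketch below shows that arithmetic, assuming approximate per-GPU values for a Tesla V100 32 GB (5,120 CUDA cores, 640 Tensor Cores, 32 GB of memory, and about 125 TFLOPS of Tensor Core performance). These per-GPU values are assumptions and are not listed on this page; the `GpuSpec` type and `system_totals` helper are hypothetical names used only for illustration.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class GpuSpec:
    """Hypothetical per-GPU specification record."""
    cuda_cores: int
    tensor_cores: int
    memory_gb: int
    tensor_tflops: float

# ASSUMED approximate figures for a Tesla V100 32 GB; not taken from this page.
V100_32GB = GpuSpec(cuda_cores=5120, tensor_cores=640, memory_gb=32, tensor_tflops=125.0)

def system_totals(gpu: GpuSpec, count: int) -> dict:
    """Multiply per-GPU figures by the number of GPUs in the system."""
    return {
        "cuda_cores": gpu.cuda_cores * count,
        "tensor_cores": gpu.tensor_cores * count,
        "gpu_memory_gb": gpu.memory_gb * count,
        "tensor_pflops": gpu.tensor_tflops * count / 1000,  # TFLOPS -> PFLOPS
    }

totals = system_totals(V100_32GB, 16)
print(totals)
```

Under these assumptions the totals line up with the CUDA Cores, Tensor Cores, GPU Memory, and Performance rows; the 2 petaFLOPS figure refers to Tensor Core throughput, not general-purpose FP32 or FP64.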